Version: v1.0

This example was created by evevalentine2017

Finished in 39.4 seconds

Setting up the model...

Preparing inputs...

Processing...

Loading VAE weight: models/VAE/sdxl_vae.safetensors

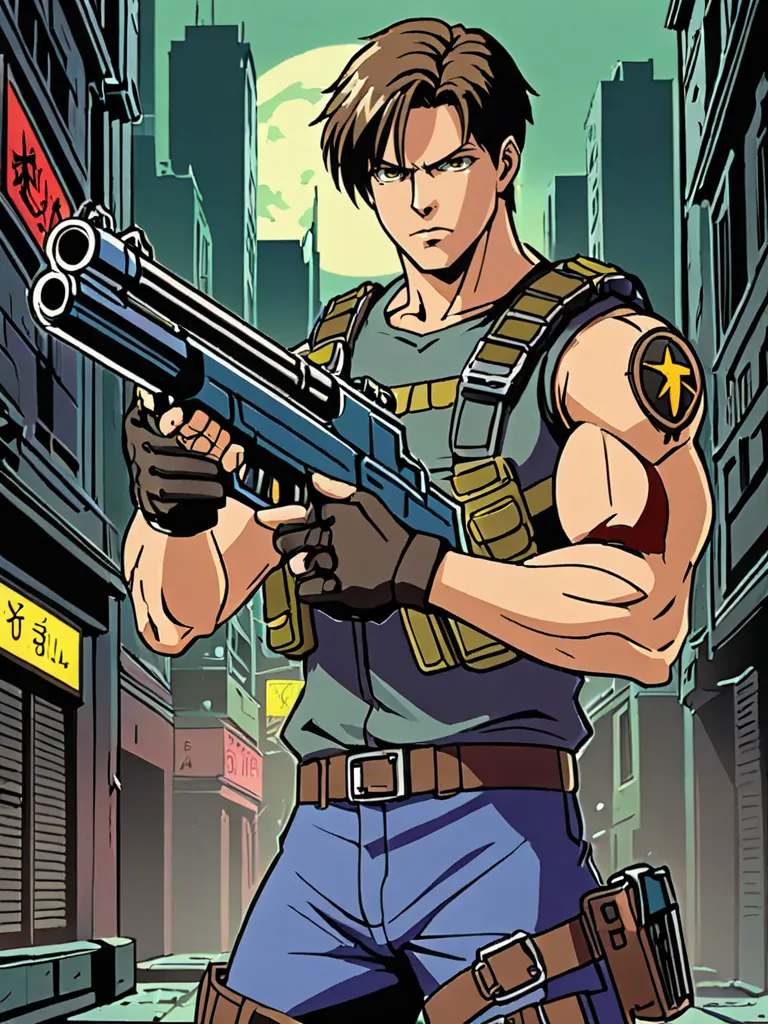

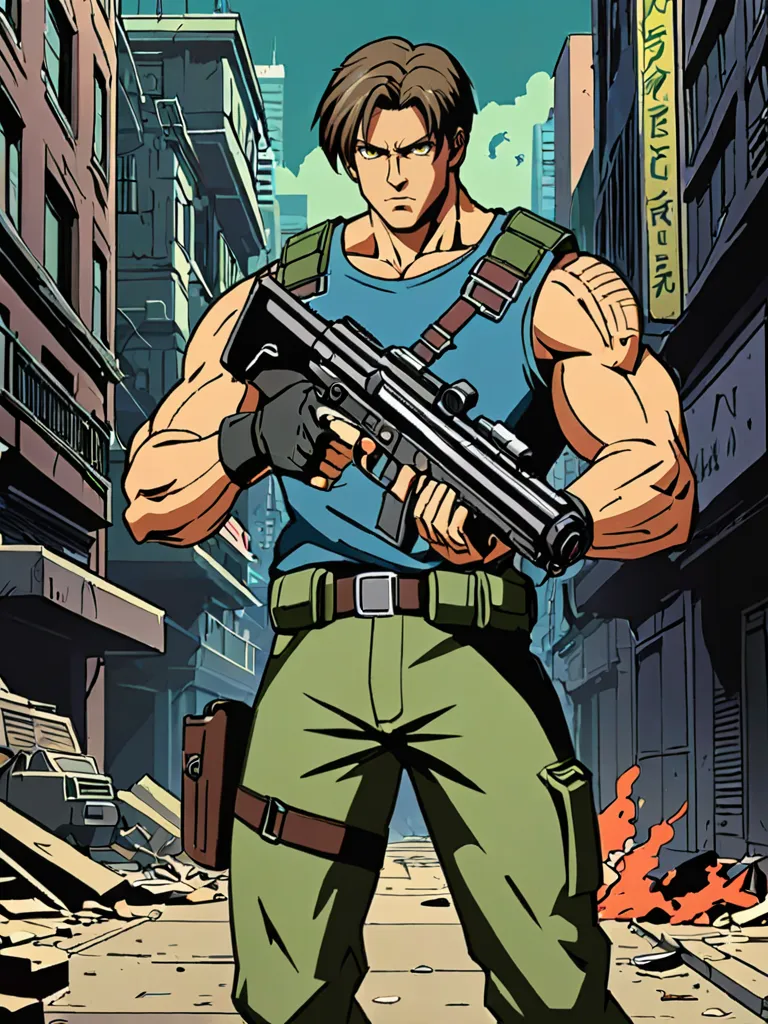

Full prompt: Anime, plain colors, flat colors, celshade, best quality, HUNK from Resident Evil, holding weapon, gun, dark city background, horror theme

Full negative prompt: negativeXL_D, realistic, real photo, textured skin, realism, 3d, volumetric, looking at the viewer, nipples, nsfw, (worst quality, low quality:1.0), easynegative, (extra fingers, malformed hands, polydactyly:1.5), blurry, watermark, text

0%| | 0/10 [00:00<?, ?it/s]

10%|█ | 1/10 [00:01<00:14, 1.56s/it]

20%|██ | 2/10 [00:03<00:14, 1.80s/it]

30%|███ | 3/10 [00:05<00:13, 1.91s/it]

40%|████ | 4/10 [00:07<00:11, 1.94s/it]

50%|█████ | 5/10 [00:09<00:09, 1.95s/it]

60%|██████ | 6/10 [00:11<00:07, 1.94s/it]

70%|███████ | 7/10 [00:13<00:05, 1.89s/it]

80%|████████ | 8/10 [00:14<00:03, 1.81s/it]

90%|█████████ | 9/10 [00:16<00:01, 1.64s/it]

100%|██████████| 10/10 [00:17<00:00, 1.42s/it]

100%|██████████| 10/10 [00:17<00:00, 1.71s/it]

Decoding latents in cuda:0...

done in 1.74s

Move latents to cpu...

done in 0.02s

0: 640x480 1 face, 158.3ms

Speed: 2.5ms preprocess, 158.3ms inference, 22.4ms postprocess per image at shape (1, 3, 640, 480)

0%| | 0/5 [00:00<?, ?it/s]

20%|██ | 1/5 [00:00<00:03, 1.25it/s]

40%|████ | 2/5 [00:01<00:02, 1.42it/s]

60%|██████ | 3/5 [00:02<00:01, 1.55it/s]

80%|████████ | 4/5 [00:02<00:00, 1.77it/s]

100%|██████████| 5/5 [00:02<00:00, 2.09it/s]

100%|██████████| 5/5 [00:02<00:00, 1.80it/s]

Decoding latents in cuda:0...

done in 0.57s

Move latents to cpu...

done in 0.01s

0: 640x480 1 face, 8.0ms

Speed: 2.3ms preprocess, 8.0ms inference, 1.3ms postprocess per image at shape (1, 3, 640, 480)

0%| | 0/5 [00:00<?, ?it/s]

20%|██ | 1/5 [00:00<00:02, 1.48it/s]

40%|████ | 2/5 [00:01<00:01, 1.55it/s]

60%|██████ | 3/5 [00:01<00:01, 1.64it/s]

80%|████████ | 4/5 [00:02<00:00, 1.84it/s]

100%|██████████| 5/5 [00:02<00:00, 2.16it/s]

100%|██████████| 5/5 [00:02<00:00, 1.90it/s]

Decoding latents in cuda:0...

done in 0.57s

Move latents to cpu...

done in 0.0s

0: 640x480 1 face, 7.7ms

Speed: 2.3ms preprocess, 7.7ms inference, 1.3ms postprocess per image at shape (1, 3, 640, 480)

0%| | 0/5 [00:00<?, ?it/s]

20%|██ | 1/5 [00:00<00:02, 1.39it/s]

40%|████ | 2/5 [00:01<00:01, 1.50it/s]

60%|██████ | 3/5 [00:01<00:01, 1.60it/s]

80%|████████ | 4/5 [00:02<00:00, 1.80it/s]

100%|██████████| 5/5 [00:02<00:00, 2.12it/s]

100%|██████████| 5/5 [00:02<00:00, 1.85it/s]

Decoding latents in cuda:0...

done in 0.57s

Move latents to cpu...

done in 0.0s

Uploading outputs...

Finished.

This example was created by evevalentine2017

Finished in 39.4 seconds

Setting up the model...

Preparing inputs...

Processing...

Loading VAE weight: models/VAE/sdxl_vae.safetensors

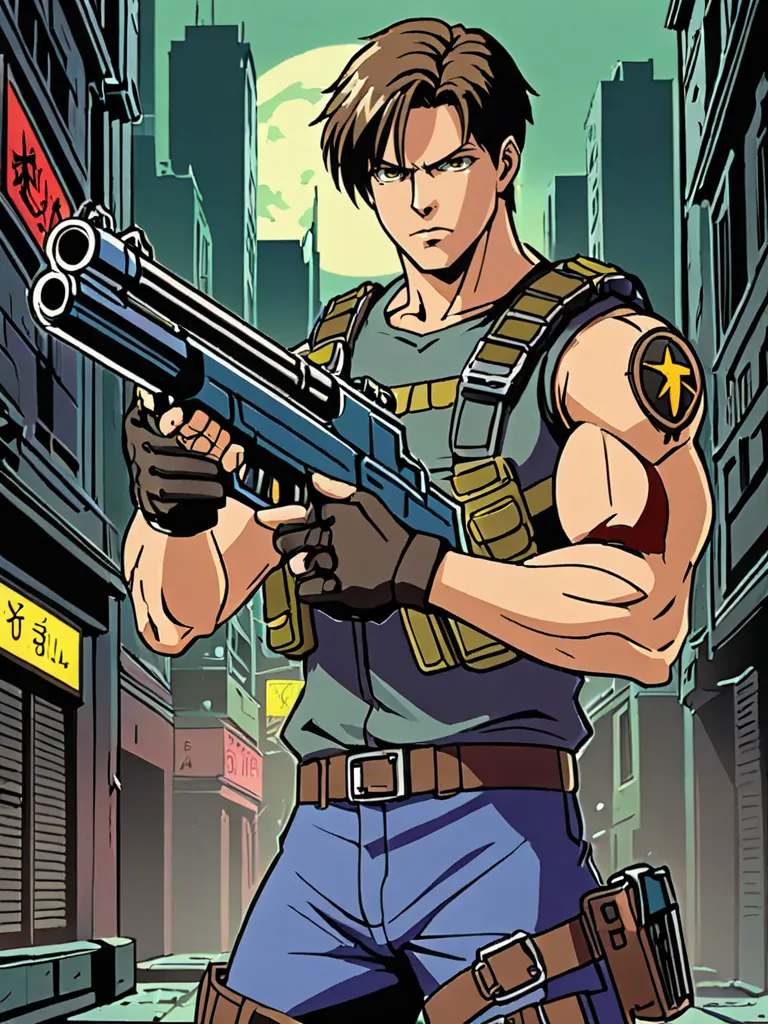

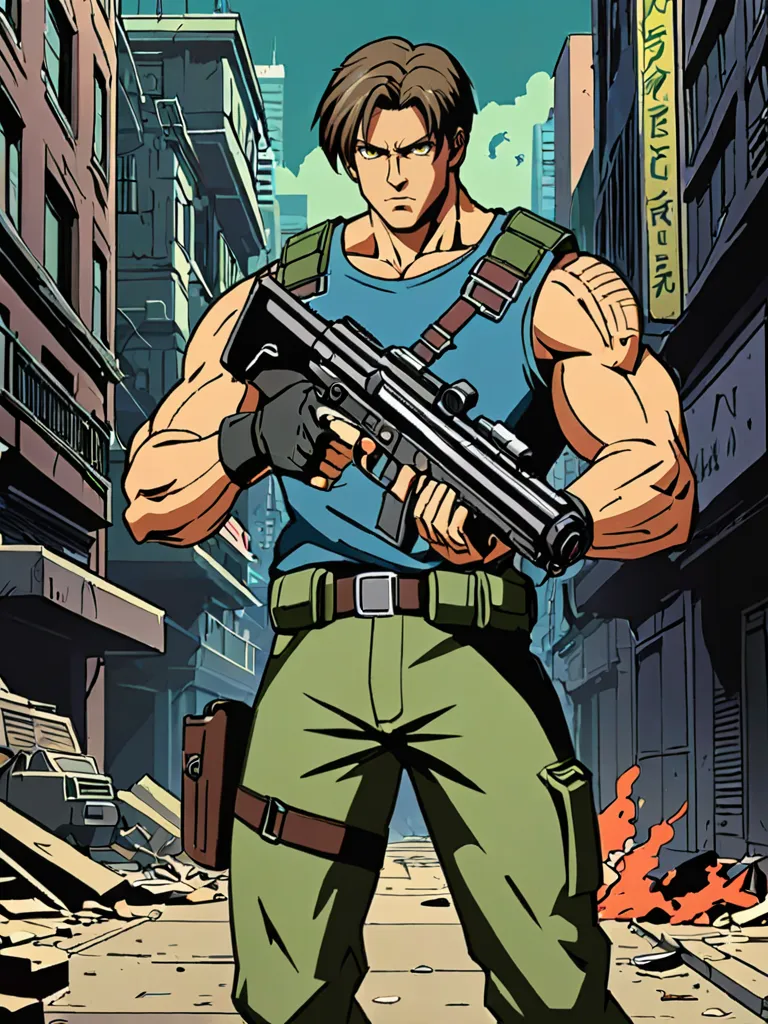

Full prompt: Anime, plain colors, flat colors, celshade, best quality, HUNK from Resident Evil, holding weapon, gun, dark city background, horror theme

Full negative prompt: negativeXL_D, realistic, real photo, textured skin, realism, 3d, volumetric, looking at the viewer, nipples, nsfw, (worst quality, low quality:1.0), easynegative, (extra fingers, malformed hands, polydactyly:1.5), blurry, watermark, text

0%| | 0/10 [00:00<?, ?it/s]

10%|█ | 1/10 [00:01<00:14, 1.56s/it]

20%|██ | 2/10 [00:03<00:14, 1.80s/it]

30%|███ | 3/10 [00:05<00:13, 1.91s/it]

40%|████ | 4/10 [00:07<00:11, 1.94s/it]

50%|█████ | 5/10 [00:09<00:09, 1.95s/it]

60%|██████ | 6/10 [00:11<00:07, 1.94s/it]

70%|███████ | 7/10 [00:13<00:05, 1.89s/it]

80%|████████ | 8/10 [00:14<00:03, 1.81s/it]

90%|█████████ | 9/10 [00:16<00:01, 1.64s/it]

100%|██████████| 10/10 [00:17<00:00, 1.42s/it]

100%|██████████| 10/10 [00:17<00:00, 1.71s/it]

Decoding latents in cuda:0...

done in 1.74s

Move latents to cpu...

done in 0.02s

0: 640x480 1 face, 158.3ms

Speed: 2.5ms preprocess, 158.3ms inference, 22.4ms postprocess per image at shape (1, 3, 640, 480)

0%| | 0/5 [00:00<?, ?it/s]

20%|██ | 1/5 [00:00<00:03, 1.25it/s]

40%|████ | 2/5 [00:01<00:02, 1.42it/s]

60%|██████ | 3/5 [00:02<00:01, 1.55it/s]

80%|████████ | 4/5 [00:02<00:00, 1.77it/s]

100%|██████████| 5/5 [00:02<00:00, 2.09it/s]

100%|██████████| 5/5 [00:02<00:00, 1.80it/s]

Decoding latents in cuda:0...

done in 0.57s

Move latents to cpu...

done in 0.01s

0: 640x480 1 face, 8.0ms

Speed: 2.3ms preprocess, 8.0ms inference, 1.3ms postprocess per image at shape (1, 3, 640, 480)

0%| | 0/5 [00:00<?, ?it/s]

20%|██ | 1/5 [00:00<00:02, 1.48it/s]

40%|████ | 2/5 [00:01<00:01, 1.55it/s]

60%|██████ | 3/5 [00:01<00:01, 1.64it/s]

80%|████████ | 4/5 [00:02<00:00, 1.84it/s]

100%|██████████| 5/5 [00:02<00:00, 2.16it/s]

100%|██████████| 5/5 [00:02<00:00, 1.90it/s]

Decoding latents in cuda:0...

done in 0.57s

Move latents to cpu...

done in 0.0s

0: 640x480 1 face, 7.7ms

Speed: 2.3ms preprocess, 7.7ms inference, 1.3ms postprocess per image at shape (1, 3, 640, 480)

0%| | 0/5 [00:00<?, ?it/s]

20%|██ | 1/5 [00:00<00:02, 1.39it/s]

40%|████ | 2/5 [00:01<00:01, 1.50it/s]

60%|██████ | 3/5 [00:01<00:01, 1.60it/s]

80%|████████ | 4/5 [00:02<00:00, 1.80it/s]

100%|██████████| 5/5 [00:02<00:00, 2.12it/s]

100%|██████████| 5/5 [00:02<00:00, 1.85it/s]

Decoding latents in cuda:0...

done in 0.57s

Move latents to cpu...

done in 0.0s

Uploading outputs...

Finished.