Version: v1.0

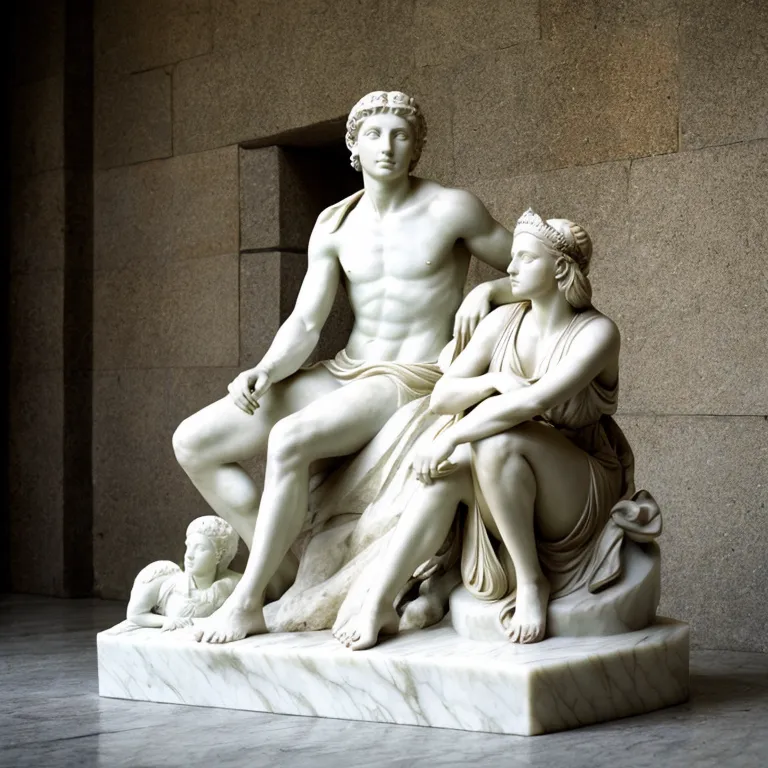

Input

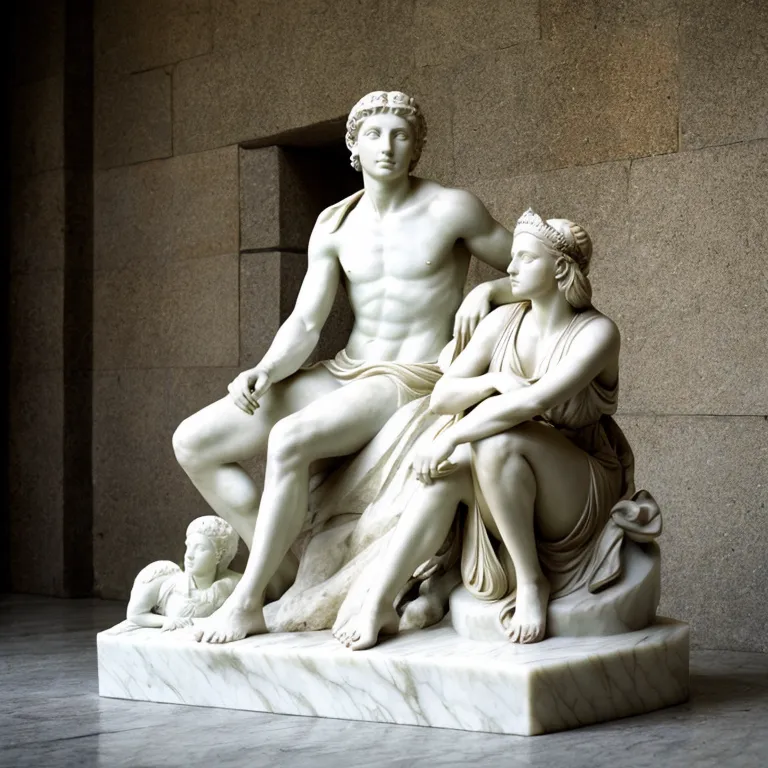

Output

This example was created by evevalentine2017

Finished in 104.3 seconds

Setting up the model...

Preparing inputs...

Processing...

Full prompt: old gold, (Marble sculpture), greek empress, toga, moody and Psychedelic ibex, Gopnik and Kidcore, luminescent, stunning, 50mm,

Full negative prompt: nsfw, logo, text, ng_deepnegative_v1_75t, rev2-badprompt, verybadimagenegative_v1.3, mutated hands and fingers, poorly drawn face, extra limb, missing limb, disconnected limbs, malformed hands, ugly,

0%| | 0/20 [00:00<?, ?it/s]

5%|▌ | 1/20 [00:01<00:24, 1.28s/it]

10%|█ | 2/20 [00:03<00:29, 1.65s/it]

15%|█▌ | 3/20 [00:05<00:29, 1.74s/it]

20%|██ | 4/20 [00:06<00:29, 1.82s/it]

25%|██▌ | 5/20 [00:08<00:27, 1.83s/it]

30%|███ | 6/20 [00:10<00:25, 1.84s/it]

35%|███▌ | 7/20 [00:12<00:23, 1.83s/it]

40%|████ | 8/20 [00:14<00:21, 1.77s/it]

45%|████▌ | 9/20 [00:15<00:19, 1.76s/it]

50%|█████ | 10/20 [00:17<00:17, 1.79s/it]

55%|█████▌ | 11/20 [00:19<00:15, 1.76s/it]

60%|██████ | 12/20 [00:21<00:13, 1.73s/it]

65%|██████▌ | 13/20 [00:22<00:12, 1.73s/it]

70%|███████ | 14/20 [00:24<00:10, 1.70s/it]

75%|███████▌ | 15/20 [00:26<00:08, 1.68s/it]

80%|████████ | 16/20 [00:27<00:06, 1.64s/it]

85%|████████▌ | 17/20 [00:29<00:04, 1.63s/it]

90%|█████████ | 18/20 [00:30<00:03, 1.62s/it]

95%|█████████▌| 19/20 [00:31<00:01, 1.42s/it]

100%|██████████| 20/20 [00:32<00:00, 1.18s/it]

100%|██████████| 20/20 [00:32<00:00, 1.62s/it]

Decoding latents in cuda:0...

done in 0.96s

Move latents to cpu...

done in 0.02s

0: 640x640 1 face, 7.6ms

Speed: 3.8ms preprocess, 7.6ms inference, 24.6ms postprocess per image at shape (1, 3, 640, 640)

This example was created by evevalentine2017

Finished in 104.3 seconds

Setting up the model...

Preparing inputs...

Processing...

Full prompt: old gold, (Marble sculpture), greek empress, toga, moody and Psychedelic ibex, Gopnik and Kidcore, luminescent, stunning, 50mm,

Full negative prompt: nsfw, logo, text, ng_deepnegative_v1_75t, rev2-badprompt, verybadimagenegative_v1.3, mutated hands and fingers, poorly drawn face, extra limb, missing limb, disconnected limbs, malformed hands, ugly,

0%| | 0/20 [00:00<?, ?it/s]

5%|▌ | 1/20 [00:01<00:24, 1.28s/it]

10%|█ | 2/20 [00:03<00:29, 1.65s/it]

15%|█▌ | 3/20 [00:05<00:29, 1.74s/it]

20%|██ | 4/20 [00:06<00:29, 1.82s/it]

25%|██▌ | 5/20 [00:08<00:27, 1.83s/it]

30%|███ | 6/20 [00:10<00:25, 1.84s/it]

35%|███▌ | 7/20 [00:12<00:23, 1.83s/it]

40%|████ | 8/20 [00:14<00:21, 1.77s/it]

45%|████▌ | 9/20 [00:15<00:19, 1.76s/it]

50%|█████ | 10/20 [00:17<00:17, 1.79s/it]

55%|█████▌ | 11/20 [00:19<00:15, 1.76s/it]

60%|██████ | 12/20 [00:21<00:13, 1.73s/it]

65%|██████▌ | 13/20 [00:22<00:12, 1.73s/it]

70%|███████ | 14/20 [00:24<00:10, 1.70s/it]

75%|███████▌ | 15/20 [00:26<00:08, 1.68s/it]

80%|████████ | 16/20 [00:27<00:06, 1.64s/it]

85%|████████▌ | 17/20 [00:29<00:04, 1.63s/it]

90%|█████████ | 18/20 [00:30<00:03, 1.62s/it]

95%|█████████▌| 19/20 [00:31<00:01, 1.42s/it]

100%|██████████| 20/20 [00:32<00:00, 1.18s/it]

100%|██████████| 20/20 [00:32<00:00, 1.62s/it]

Decoding latents in cuda:0...

done in 0.96s

Move latents to cpu...

done in 0.02s

0: 640x640 1 face, 7.6ms

Speed: 3.8ms preprocess, 7.6ms inference, 24.6ms postprocess per image at shape (1, 3, 640, 640)